I recently wondered what would be involved in setting up a self-signed HTTPS certificate to use with an internal WebAPI OWIN service that listens on a specific port number. Because some interesting problems cropped up as I went through the steps, I thought I would write them up as a reminder for my future self.

As we know, all services should allow you to connect to them over HTTPS if you are transferring sensitive data (think passwords, etc.). If you are writing many local services, a better alternative to adding every self-signed certificate you create to the trusted root store is to create your own root CA certificate.

This root CA certificate should be installed into the trusted root store of the machine. What does this give us? Each service gets a certificate of its own that is signed by our root CA certificate, and because we have trusted the root cert, all other certificates issued from it are automatically trusted (this is the chain of trust).

MakeCert

Now if you have created any certificates in the past you will almost certainly have come across the Windows makecert command. Creating a CA certificate with this utility is pretty straightforward:

makecert.exe -r -n "CN=OceanAirdropCA" -pe -sv OceanAirdropCA.pvk -a sha512 -len 4096 -b 01/01/2019 -e 01/01/2040 -cy authority OceanAirdropCA.cer

pvk2pfx.exe -pvk OceanAirdropCA.pvk -spc OceanAirdropCA.cer -pfx OceanAirdropCA.pfx

pause

Then, later on, when you want to generate client certificates from this CA cert, it's again pretty simple:

makecert.exe -iv OceanAirdropCA.pvk -ic OceanAirdropCA.cer -n "CN=OceanAirdropClient" -pe -sv OceanAirdropClient.pvk -a sha512 -len 4096 -b 01/01/2019 -e 01/01/2040 -sky exchange OceanAirdropClient.cer -eku 1.3.6.1.5.5.7.3.1

pvk2pfx.exe -pvk OceanAirdropClient.pvk -spc OceanAirdropClient.cer -pfx OceanAirdropClient.pfx

But the problem with the MakeCert utility is that it's as old as God's dog, and it turns out that it doesn't populate certain fields that Chrome needs in order to validate the certificate. Here's the error Chrome gives you:

Chrome tells you that this certificate is not secure. This is because, since Chrome 58, the certificate has to include a subjectAltName, and the makecert command has no way to generate a "Subject Alternative Name".

Using PowerShell to create our CA certificate

There are a couple of alternatives we could use to create the certs: either OpenSSL or PowerShell. This Stack Overflow post nicely explains how to create a self-signed cert using OpenSSL, but I opted to go the PowerShell route.

Here's what to do. First start PowerShell as administrator and issue each of the following commands in order:

    # Step 01: Set up params for the new self-signed cert. Notice that the key usage includes 'CertSign'
    $params = @{
        DnsName           = "OceanAirdrop.com CA"
        KeyLength         = 2048
        KeyAlgorithm      = 'RSA'
        HashAlgorithm     = 'SHA256'
        KeyExportPolicy   = 'Exportable'
        NotAfter          = (Get-Date).AddYears(10)
        CertStoreLocation = 'Cert:\LocalMachine\My'
        KeyUsage          = 'CertSign','CRLSign'
    }

    # Step 02: Actually create our CA cert and store it in the variable $rootCA
    $rootCA = New-SelfSignedCertificate @params

    # Step 03: Export the public CA key to file
    Export-Certificate -Cert $rootCA -FilePath "C:\certs\OceanAirdropRootCA.crt"

    # Step 04: Export the public/private key to file (as pfx file)
    Export-PfxCertificate -Cert $rootCA -FilePath 'C:\certs\OceanAirdropRootCA.pfx' -Password (ConvertTo-SecureString -AsPlainText 'securepw' -Force)

The above commands first create a root CA certificate and store it in the variable $rootCA. They then export the certificate to disk as OceanAirdropRootCA in both the .crt and .pfx file formats.

Now we have a root CA certificate, ho-ho-ho!

But notice in the above picture that this certificate is not trusted on my machine. That's because it needs to be installed into the root certificate store. So let's install the root CA certificate into the computer's trusted certificate store.

I have found that you can type the command "certmgr.msc" from the start menu to access the certificates on your machine, BUT by default it only shows your personal certificates, and we want to install our certificate at the machine level.

So, to install the certificate type "mmc" from the start menu to bring up the mmc snap-in. Then click File->"Add/Remove Snap-In" where you will be presented with this dialog. Select Certificates and press "Add". From here, this will give you the option to select "Computer Account".

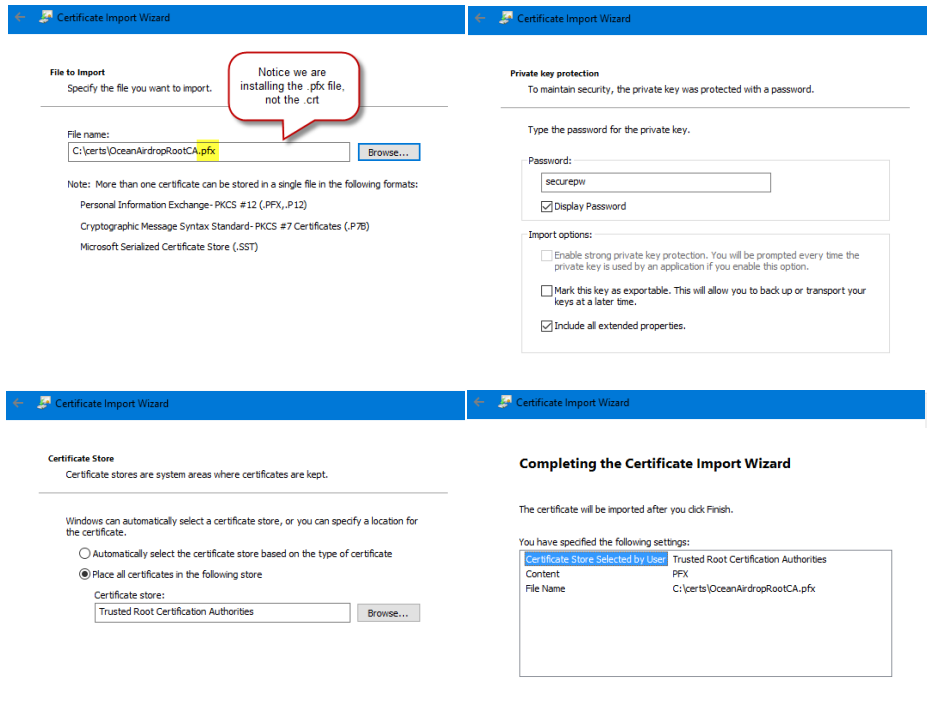

In Trusted Root Certification Authorities, right-click on the Certificates folder, then select Import:

Then go through the wizard process:

Now, when we inspect our root certificate, we can see that it is trusted.
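If you would rather script this step than click through the MMC wizard, the same PowerShell PKI module that provides New-SelfSignedCertificate also has an Import-Certificate cmdlet. What follows is only a minimal sketch, assuming an elevated PowerShell session and the C:\certs\OceanAirdropRootCA.crt file exported in Step 03:

```powershell
# Sketch: import the exported root CA certificate into the machine-level
# Trusted Root Certification Authorities store (needs an elevated session).
Import-Certificate -FilePath 'C:\certs\OceanAirdropRootCA.crt' -CertStoreLocation 'Cert:\LocalMachine\Root'

# List matching entries in the store to confirm the import worked.
# The '*OceanAirdrop*' filter assumes the subject used in Step 01.
Get-ChildItem 'Cert:\LocalMachine\Root' | Where-Object { $_.Subject -like '*OceanAirdrop*' }
```

Only the public .crt file is needed here; the .pfx with the private key should stay somewhere safe.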

Using PowerShell to create a client certificate

At this point we have a root CA certificate that we can start using to mint/sign new client certificates. These client certs will be used by our OWIN services.

Again, open up PowerShell and run through the following commands:

    # Now at some point later on you might want to create another certificate that is signed by your CA key

    # Step 05: First let's load the CA certificate from disk into the variable $rootCA
    $rootCA = Get-PfxCertificate -FilePath "C:\certs\OceanAirdropRootCA.crt"

    # Step 06: Set up params for the new cert, signed by our root CA.
    $params = @{
        Signer            = $rootCA
        KeyLength         = 2048
        KeyAlgorithm      = 'RSA'
        HashAlgorithm     = 'SHA256'
        KeyExportPolicy   = 'Exportable'
        NotAfter          = (Get-Date).AddYears(2)
        CertStoreLocation = 'Cert:\LocalMachine\My'
    }

    # Step 07: Actually create the cert and store it in the variable $appCert1
    $appCert1 = New-SelfSignedCertificate @params -Subject *.my.domain -DnsName my.domain, *.my.domain

    # Step 08: Export the keys to file to store securely
    Export-Certificate -Cert $appCert1 -FilePath "C:\certs\appCert1.crt"
    Export-PfxCertificate -Cert $appCert1 -FilePath 'C:\certs\appCert1.pfx' -Password (ConvertTo-SecureString -AsPlainText 'securepw' -Force)

When creating a client certificate, the root CA, key length and expiry date hardly ever change, but the common name and DNS names do. So above I declare the options that don't change for New-SelfSignedCertificate in a variable named "params". Then, on the call to New-SelfSignedCertificate, I specify the -Subject and -DnsName fields on the command line. Here's me running through those commands:

This produces a certificate that looks like this:

Notice that the Subject Alternative names are now correctly populated.
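You can also check this from the same PowerShell session instead of opening the certificate dialog. This is a rough sketch that assumes $appCert1 still holds the certificate created in Step 07; 2.5.29.17 is the standard OID for the subjectAltName extension:

```powershell
# Sketch: print the Subject Alternative Name extension of the new certificate.
# 2.5.29.17 is the OID for subjectAltName; $appCert1 comes from Step 07.
$appCert1.Extensions |
    Where-Object { $_.Oid.Value -eq '2.5.29.17' } |
    ForEach-Object { $_.Format($true) }   # $true = one entry per line
```

If nothing is printed, the certificate has no SAN extension and Chrome will reject it in the same way it rejected the makecert one.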

Registering the port with Windows

Okay, at this point we have created our certificates, and I assume we have a WebAPI service running and listening on a particular port number. For us to use our client certificate, we need to register the port with Windows, then bind the certificate to the service. Here's how to do that.

My service is going to be listening on port 9000 for https traffic. Open up a command prompt and issue the following command:

netsh http add urlacl url=https://+:9000/ user=everyone

If at a later date you need to delete the reservation (as I did in testing) you can use this command:

netsh http delete urlacl url=https://+:9000/

If you want to show a list of the reservations:

netsh http show urlacl >c:\bindings.txt

start notepad c:\bindings.txt

Binding the certificate to the service

At this point, we have the client certificate on the machine and have registered our listening port with Windows. The next thing we need to do is run a command to bind our new SSL certificate to our application port (9000).

netsh http add sslcert ipport=0.0.0.0:{{port}} certhash={{thumbprint}} appid={{app-guid}}

There are three variables we need to plug into this command: the port number (which is 9000 in our case), the certificate thumbprint and a GUID. You can pick up the thumbprint of the certificate from here (you just need to remove all the spaces from the string):

For the GUID, you can either generate a random one or pick up the application GUID from your Visual Studio project:

Once you have those three pieces of information, you would issue the command as below:
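If you prefer not to copy the thumbprint out of the certificate dialog by hand, the values can be gathered in PowerShell. The following is a sketch under a few assumptions: an elevated session, the certificate from Step 07 still sitting in Cert:\LocalMachine\My with the subject CN=*.my.domain, and a freshly generated random GUID rather than the one from a Visual Studio project:

```powershell
# Sketch: look up the certificate created in Step 07 and bind it to port 9000.
# Assumes the subject 'CN=*.my.domain' used earlier; adjust to your own certificate.
$cert = Get-ChildItem 'Cert:\LocalMachine\My' |
    Where-Object { $_.Subject -eq 'CN=*.my.domain' } |
    Select-Object -First 1

$thumbprint = $cert.Thumbprint              # hex string, already without spaces
$appId = [guid]::NewGuid().ToString('B')    # random GUID wrapped in braces

# Quote the arguments so PowerShell does not treat the braces as a script block.
netsh http add sslcert ipport=0.0.0.0:9000 "certhash=$thumbprint" "appid=$appId"
```

The Thumbprint property comes back without spaces, so the manual clean-up step is not needed when going this route.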

If you are playing around a bit, at some point you will want to delete the binding. To delete an SSL certificate from a port number use the following command:

netsh http delete sslcert ipport=0.0.0.0:9000

Opening ports on the firewall

If you intend to access this service from another machine, make sure you open the port in the Windows firewall. You can do this from the command line using these commands:

netsh advfirewall firewall add rule name="OceanAirdop_Svc_9000" dir=in action=allow protocol=TCP localport=9000

netsh advfirewall firewall add rule name="OceanAirdop_Svc_9000" dir=out action=allow protocol=TCP localport=9000
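Since the rest of this walkthrough already uses PowerShell, the inbound rule can also be created with the New-NetFirewallRule cmdlet from the NetSecurity module. This is a sketch only, assuming an elevated session on a Windows version that ships that module, and reusing the rule name from the netsh commands above:

```powershell
# Sketch: PowerShell equivalent of the inbound netsh rule above (needs an elevated session).
New-NetFirewallRule -DisplayName 'OceanAirdop_Svc_9000' -Direction Inbound -Action Allow -Protocol TCP -LocalPort 9000

# Show the rule afterwards to confirm it exists.
Get-NetFirewallRule -DisplayName 'OceanAirdop_Svc_9000'
```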

Back to the code

Now, if we jump back to our code, all we need to do is alter the base address from http to the new https:

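    // Requires: using System; using Microsoft.Owin.Hosting;
    static void Main(string[] args)
    {
        try
        {
            // Note the https scheme; it has to match the URL reservation and the SSL binding
            string baseAddress = "https://L-03067:9000/";

            // Start OWIN host and keep it alive until Enter is pressed
            using (WebApp.Start(url: baseAddress))
            {
                Console.ReadLine();
            }
        }
        catch (Exception ex)
        {
            // Surface start-up failures (e.g. "Failed to listen on prefix") instead of swallowing them
            Console.WriteLine(ex);
        }
    }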

Now when we visit the endpoint over https, all works as intended!

WebAPI Exceptions

Okay, it wasn't all plain sailing... I encountered these exceptions along the way, and they may raise their heads for you too. If they do, here are the solutions.

WebAPI Exceptions - Failed to listen on prefix

If, when you run your code, you get the following error: "Failed to listen on prefix"....

...check to make sure you have registered the correct http scheme. So it should be netsh http add urlacl url=https://+:9000/ user=everyone (notice the s) and not: netsh http add urlacl url=http://+:9000/ user=everyone
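A quick way to see what is actually registered is to ask netsh for that specific reservation and binding. This is a small sketch, assuming port 9000 as used throughout this post; both commands run from a command prompt or a PowerShell session:

```powershell
# Sketch: show the URL reservation for the https prefix on port 9000.
netsh http show urlacl url=https://+:9000/

# Show the SSL certificate binding for the same port.
netsh http show sslcert ipport=0.0.0.0:9000
```

If the first command comes back without a reservation entry, the prefix was most likely registered with the http scheme instead.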

WebAPI Exceptions - Access Denied Exception

If you are debugging your service and get an "Access Denied" error back like this, make sure you start Visual Studio in Administrator mode.

You can do a quick check by looking at the title bar of Visual Studio. It should say (Administrator).

Wrap up!

That's it... I can now visit my WebAPI service over https using a self-signed certificate!